From Shadow IT to Shadow AI: A Practitioner's Guide to Discovery and Containment

The Problem Has Evolved, But Our Response Hasn't

Shadow IT has been a governance challenge for years, escalating alongside bring-your-own-device (BYOD) practices and the sprawl of SaaS vendors. Employees adopt unauthorized technology to solve problems faster than IT can deliver solutions, a pattern familiar to anyone with risk management responsibilities. What’s changed in the AI era is the velocity and scope of shadow adoption.

Shadow AI represents a fundamental shift in how unauthorized technology enters the enterprise. Where shadow IT required deliberate procurement decisions, shadow AI often arrives embedded in existing approved platforms. A marketing team using Adobe Creative Cloud suddenly has access to Firefly’s generative capabilities. Sales leverages conversation intelligence features built into their CRM without security review. Legal discovers contract analysis tools within their existing document management platform. The technology stack expands without a single new purchase order.

And in case you’ve been hiding under a rock, this isn’t hypothetical. In conversations with technology leaders across industries over the past year, I’ve documented cases where organizations discovered they were running three separate AI-powered copywriting platforms in marketing alone, conversation intelligence tools in sales that duplicated (and contradicted) CRM analytics, and HR teams expensing individual subscriptions to LLMs or AI-enhanced SaaS for “productivity enhancement” while enterprise pilots went underutilized. Recent ISACA research confirms the scale of this challenge: 83% of IT and business professionals believe employees in their organization are using AI, yet only 31% of organizations have implemented formal, comprehensive AI policies.[1]

Addressing shadow AI requires adaptation to these specific adoption patterns, but the fundamental principles remain constant: visibility enables control, risk assessment drives prioritization, and sustainable programs balance security with operational reality.

Redefining the Scope

Traditional shadow IT governance assumes technology enters through procurement channels. Shadow AI requires broadening our definition of what constitutes a new capability requiring governance oversight.

Start by recognizing that business units are pursuing legitimate objectives through whatever means are available: faster content generation, improved analysis, enhanced productivity, whatever label they put on it. The governance failure isn’t the business need; it’s the gap between that need and approved solution delivery. Put differently: don’t blame the business for wanting to be better.

Visibility becomes the foundational challenge. Finance emerges as a critical partner in this effort, but not through traditional IT cost center monitoring. Shadow AI subscriptions appear across expense categories: marketing software, productivity tools, professional development, even miscellaneous business expenses.

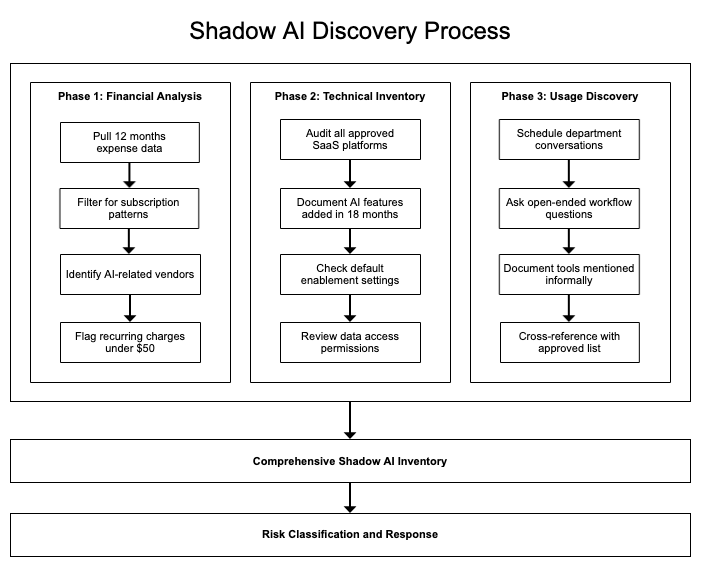

A comprehensive discovery approach requires three parallel efforts:

Expense Pattern Analysis: Review twelve months of corporate card and expense report data across all departments, not just IT-categorized software spending. Look for subscription patterns, monthly charges under $50 (which often bypass procurement thresholds), SaaS vendor names, and productivity tool expenses. The patterns emerge when you stop filtering by cost center and start looking at actual spending behavior.

SaaS Feature Inventory: Most approved platforms in your environment have likely added AI capabilities in the past 18 months. Major vendors launched generative AI features at unprecedented speed: Salesforce introduced Einstein GPT in March 2023,[2] Adobe released Firefly commercially in September 2023 (and reported more than 13 billion images generated by October 2024),[3] and Microsoft, Google Workspace, Zoom, and Slack followed with similar capabilities. These features may be enabled by default or available through existing licensing tiers. Document what capabilities exist, not just which applications are approved.

Usage Pattern Discovery: Technical monitoring captures part of the picture, but conversation captures the rest. Department-level discussions about workflow and process often reveal AI adoption that doesn’t appear in logs or expense reports. The legal team “experimenting” with Claude for contract review. Marketing using ChatGPT for campaign brainstorming. Finance testing AI-powered analysis tools. IT relying on AI code completion. People talk about the tools they use when you ask open-ended questions about how they actually get work done.
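To make the expense pattern analysis concrete, here is a minimal Python sketch. It assumes expense data has already been exported into simple records; the `Expense` fields, the vendor keyword list, and the three-month recurrence cutoff are hypothetical choices, not a standard. It flags two things worth a follow-up conversation: merchant names that match known AI vendors, and small charges under $50 that recur across several months.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date

# Hypothetical vendor keyword watchlist; expect false positives (unrelated merchants
# that share a name) and tune it to what actually appears in your data.
AI_KEYWORDS = ("openai", "chatgpt", "anthropic", "claude", "jasper", "midjourney", "gemini", "copilot")
SMALL_CHARGE_LIMIT = 50.00   # charges under this often bypass procurement thresholds
MIN_RECURRING_MONTHS = 3     # hypothetical cutoff for "this looks like a subscription"


@dataclass
class Expense:
    employee: str
    merchant: str
    amount: float
    posted: date


def flag_candidates(expenses):
    """Return sorted (employee, merchant, reason) tuples worth a follow-up conversation."""
    # Count the distinct months in which each employee paid each merchant a small amount.
    small_months = defaultdict(set)
    for e in expenses:
        if e.amount < SMALL_CHARGE_LIMIT:
            small_months[(e.employee, e.merchant.lower())].add((e.posted.year, e.posted.month))

    flagged = set()
    for e in expenses:
        key = (e.employee, e.merchant.lower())
        if any(k in key[1] for k in AI_KEYWORDS):
            flagged.add((*key, "ai-vendor-name"))
        elif len(small_months[key]) >= MIN_RECURRING_MONTHS:
            flagged.add((*key, "recurring-small-charge"))
    return sorted(flagged)


if __name__ == "__main__":
    sample = [
        Expense("avery", "OpenAI *ChatGPT Subscr", 20.00, date(2025, 1, 3)),
        Expense("blake", "Acme Notes App", 12.00, date(2025, 1, 9)),
        Expense("blake", "Acme Notes App", 12.00, date(2025, 2, 9)),
        Expense("blake", "Acme Notes App", 12.00, date(2025, 3, 9)),
        Expense("casey", "Office Depot", 48.10, date(2025, 2, 14)),
    ]
    for row in flag_candidates(sample):
        print(row)
```

Keyword matching is crude by design: the output is a list of conversations to have, not a list of violations.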

This discovery process aligns with fundamental governance principles. You cannot govern what you cannot see, and traditional IT asset management approaches provide incomplete visibility into AI adoption patterns.
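One way to keep the SaaS feature inventory honest is to record AI capabilities as entries separate from the platforms that host them, so approval of the base platform never implies approval of the feature. The minimal sketch below is illustrative only: the field names and the three feature states are assumptions, the "unknown" fields mirror the questions of data access, processing location, and model training, and the sample entries use generic platform names rather than claims about any vendor’s defaults.

```python
from dataclasses import dataclass
from enum import Enum


class FeatureState(Enum):
    ENABLED_BY_DEFAULT = "enabled by default"
    AVAILABLE_IN_TIER = "available in current licensing tier"
    DISABLED = "disabled by administrator"


@dataclass
class AIFeature:
    platform: str                    # the approved base platform
    feature: str                     # the AI capability layered on top of it
    state: FeatureState
    security_reviewed: bool = False  # approving the platform does NOT set this
    data_access: str = "unknown"     # what data the feature can reach
    processing_location: str = "unknown"
    trains_on_customer_data: str = "unknown"


def review_backlog(inventory):
    """Return features users can reach today that have not been security reviewed."""
    return [f for f in inventory
            if f.state is not FeatureState.DISABLED and not f.security_reviewed]


if __name__ == "__main__":
    # Generic placeholder entries, not statements about any vendor's actual defaults.
    inventory = [
        AIFeature("CRM platform", "conversation intelligence", FeatureState.ENABLED_BY_DEFAULT),
        AIFeature("document management", "contract analysis", FeatureState.AVAILABLE_IN_TIER,
                  security_reviewed=True),
        AIFeature("design suite", "image generation", FeatureState.DISABLED),
    ]
    for item in review_backlog(inventory):
        print(f"{item.platform}: {item.feature} ({item.state.value})")
```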

Risk Management: Classifying Shadow AI Risk

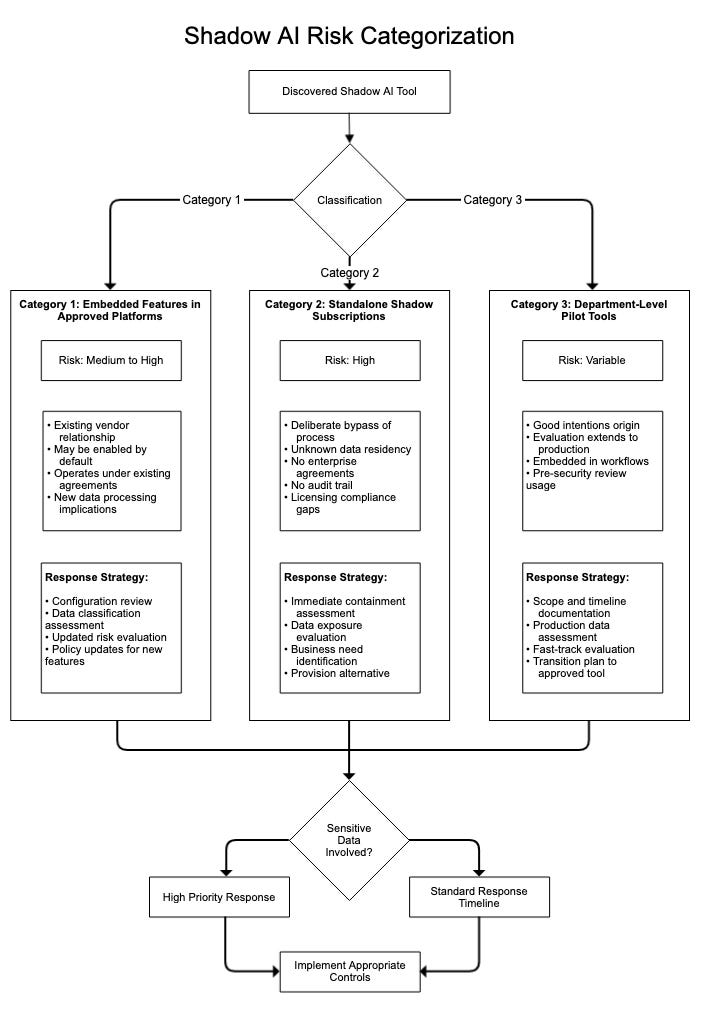

Not all shadow AI presents equivalent risk. Governance requires a classification approach that considers both the technology characteristics and the data environment, enabling risk-based decision making and resource allocation.

Category 1: Embedded Features in Approved Platforms

Risk Profile: Medium to High depending on data sensitivity and configuration

Example: Microsoft Copilot features within existing Microsoft 365 deployment

These capabilities often operate under existing vendor agreements but may introduce new data processing locations, model training considerations, or compliance implications. The governance challenge is that approval of the base platform doesn’t constitute approval of new AI features, yet these features may activate without explicit administrator consent.

Risk mitigation focuses on configuration management and data classification awareness. What data can these features access? Where is it processed? What are the model training policies? Most organizations approved Office 365 years ago, but far, far fewer have updated their risk assessments to account for Copilot capabilities.

Category 2: Standalone Shadow Subscriptions

Risk Profile: High

Example: Individual ChatGPT Plus subscriptions, Claude Pro, Google Gemini, Microsoft Copilot

These represent deliberate end-runs around governance processes. Users determined that approved solutions were insufficient and procured alternatives through personal or corporate card expenses. The risk extends beyond the AI capability itself to include unknown data residency and processing locations, lack of enterprise data protection agreements, potential exposure of proprietary information, no audit trail or usage monitoring, and licensing compliance gaps.

Note that I included Microsoft Copilot in this list because Microsoft has announced a “bring your own Copilot to work” capability,[4] further muddying the waters of shadow AI governance and staff perceptions.

Category 3: Department-Level Pilot Tools

Risk Profile: Variable based on implementation

Example: Marketing team testing Jasper, Legal evaluating contract review platforms

These often begin with good intentions: business units exploring solutions to present to IT for formal adoption. The risk emerges when “evaluation” extends beyond intended timeframes, production data enters the environment, or the tool becomes embedded in operational processes before security review.

Risk management principles apply directly here: treat risk as a business decision rather than a purely technical constraint. Some shadow AI represents acceptable risk given its business value and the sensitivity of the data involved. A marketing team using AI to generate social media post ideas from publicly available information presents a different risk than finance using AI to analyze confidential projections.
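The three categories can be turned into a repeatable first-pass triage. This is a sketch under hypothetical rules that mirror the risk profiles above: standalone subscriptions start high, embedded features move between medium and high with data sensitivity, and pilots vary with the data involved and whether they have crept into production workflows. The sensitivity levels are assumptions to replace with your own data classification scheme.

```python
from enum import IntEnum


class Category(IntEnum):
    EMBEDDED_FEATURE = 1         # AI features inside an approved platform
    STANDALONE_SUBSCRIPTION = 2  # individually procured AI tools
    DEPARTMENT_PILOT = 3         # "evaluation" tools run by a business unit


class Sensitivity(IntEnum):      # hypothetical three-level classification
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2


def risk_tier(category, sensitivity, in_production_workflow=False):
    """Map a shadow AI finding to 'low', 'medium', or 'high' using hypothetical rules."""
    if category is Category.STANDALONE_SUBSCRIPTION:
        # No enterprise agreement and unknown data residency: high regardless of data.
        return "high"
    if category is Category.EMBEDDED_FEATURE:
        # Medium to high depending on the data the feature can reach.
        return "high" if sensitivity is Sensitivity.CONFIDENTIAL else "medium"
    # Department pilots are variable: risk rises with sensitive data or production use.
    if sensitivity is Sensitivity.CONFIDENTIAL or in_production_workflow:
        return "high"
    return "low" if sensitivity is Sensitivity.PUBLIC else "medium"


if __name__ == "__main__":
    print(risk_tier(Category.DEPARTMENT_PILOT, Sensitivity.PUBLIC))
    print(risk_tier(Category.EMBEDDED_FEATURE, Sensitivity.CONFIDENTIAL))
```

The tier is a starting point for the business risk decision, not a substitute for it.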

Incident Response

Shadow AI discovery often occurs through incident response rather than proactive monitoring. A vendor security questionnaire reveals undocumented AI tools. An audit uncovers unapproved subscriptions. A data breach at an AI vendor prompts a review of what tools are actually in use.

Response requires calibrated action that addresses immediate risk without creating organizational resistance to legitimate AI adoption.

Immediate Response Framework

Containment Without Disruption: Disabling access to shadow AI tools that are embedded in business-critical workflows creates operational impact and user resistance. Initial response should focus on understanding the scope of use, the data exposed, and the risk level before taking containment action.

Evidence Preservation: Document what tools are in use, by whom, for what purposes, and what data has been processed. This information is essential for both risk assessment and for understanding the business need driving shadow adoption.

Business Impact Assessment: Shadow AI exists because it’s solving a problem. Understanding that problem is as important as addressing the security risk. If legal is using unapproved contract review tools, the question extends beyond containment to understanding why approved alternatives weren’t meeting their needs.
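Evidence preservation is easier when every finding is captured in the same shape. The record below is a hypothetical sketch, not a prescribed schema: it stores the tool, who uses it, why, and which data categories were involved, plus why the approved alternative didn’t fit, so a single record serves both the risk assessment and the business impact assessment.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class ShadowAIFinding:
    tool: str
    users: List[str]
    purpose: str                     # the business problem the tool is solving
    data_processed: List[str]        # data categories, never the data itself
    discovered_via: str              # e.g. "vendor questionnaire", "audit", "vendor breach"
    why_not_approved_tool: str = "unknown"   # input to the business impact assessment
    approved_alternative: Optional[str] = None
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def to_json(self):
        """Serialize the finding for an evidence log or ticketing system."""
        return json.dumps(asdict(self), indent=2)


if __name__ == "__main__":
    finding = ShadowAIFinding(
        tool="contract review SaaS (hypothetical)",
        users=["legal-team"],
        purpose="first-pass contract review",
        data_processed=["draft contracts"],
        discovered_via="audit",
        why_not_approved_tool="approved platform lacks clause comparison",
    )
    print(finding.to_json())
```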

Post-Incident Actions

The goal isn’t punishment but process improvement. Shadow AI incidents reveal gaps in solution delivery, in communication about available tools, or in approval process efficiency. Each discovery should inform governance improvements.

Organizations that respond to shadow AI discovery with purely restrictive measures drive adoption further underground. Users become more sophisticated about hiding unauthorized tools, and AI subscriptions, whether charged to corporate cards or submitted for reimbursement, get buried in broader expense categories.

Response that couples risk mitigation with solution delivery creates compliance through viable paths rather than barriers. “We’re disabling this tool” paired with “here’s the approved alternative and how to access it within 48 hours” works. “We’re disabling this tool, full stop” doesn’t. Anybody who’s done this for a living, myself included, knows this reality.

Building Sustainable Controls

Shadow AI requires controls that acknowledge the speed of AI capability evolution and the legitimate business pressure to adopt these tools. The control environment must be sustainable and scalable while remaining aligned with organizational objectives.

Layered Control Approach

Technical Controls: SaaS discovery tools that monitor for new AI-related domains and subscriptions. Data loss prevention policies that flag sensitive information being uploaded to AI platforms. Network monitoring for AI service API calls from unapproved sources. Automated expense report scanning for AI-related subscription patterns.

Administrative Controls: Streamlined AI tool approval processes with defined SLAs (two-week turnaround for low-risk tools). Clear guidance on what constitutes approved AI use. Regular communication about available enterprise AI capabilities. Simplified exception request processes for unique business needs.

Preventive Controls: Proactive business unit engagement to understand upcoming AI needs before shadow adoption occurs. Pre-approved AI tool catalog with documented use cases and access procedures. Procurement threshold adjustments that capture AI subscription spending. Partnership with finance to flag recurring software charges.
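As an illustration of the network monitoring control, the sketch below scans proxy log lines for traffic to AI service domains from sources that aren’t on an approved list. The log format (`timestamp source destination`), the example domain watchlist, and the gateway host name are all assumptions; a real deployment would parse whatever format your proxy or SaaS discovery tool actually emits.

```python
from collections import Counter

# Example watchlist of AI service domains; maintain your own from SaaS discovery tooling.
AI_DOMAINS = {"api.openai.com", "chatgpt.com", "claude.ai", "api.anthropic.com", "gemini.google.com"}
# Hosts sanctioned to call AI services, such as an approved gateway (hypothetical name).
APPROVED_SOURCES = {"ai-gateway-01"}


def matches_watchlist(domain):
    """True if the domain is a watched domain or one of its subdomains."""
    domain = domain.lower().rstrip(".")
    return any(domain == d or domain.endswith("." + d) for d in AI_DOMAINS)


def unapproved_ai_calls(log_lines):
    """Yield (source, domain) for AI-service traffic from sources not on the approved list.

    Assumes a simplified whitespace-separated 'timestamp source destination' format.
    """
    for line in log_lines:
        parts = line.split()
        if len(parts) < 3:
            continue  # skip malformed lines rather than failing the whole scan
        _, source, domain = parts[:3]
        if source not in APPROVED_SOURCES and matches_watchlist(domain):
            yield source, domain


def summarize(log_lines):
    """Count unapproved calls per (source, domain) pair for triage."""
    return Counter(unapproved_ai_calls(log_lines))


if __name__ == "__main__":
    logs = [
        "2025-05-01T09:00:00Z ai-gateway-01 api.openai.com",
        "2025-05-01T09:01:00Z laptop-417 claude.ai",
        "2025-05-01T09:03:00Z laptop-233 intranet.example.com",
    ]
    for (source, domain), count in summarize(logs).items():
        print(source, domain, count)
```

Suffix matching catches subdomains, but a source allowlist only works if sanctioned AI traffic really does flow through known hosts such as a gateway.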

Resource allocation principles are critical here. Organizations cannot manually review every AI tool request or monitor every potential shadow adoption vector. The control environment must be efficient enough to be sustainable.

Operational Realities and Governance Design

Academic research provides important context for understanding shadow adoption patterns. A 2014 study by Silic and Back found that employees extensively use shadow IT software that “leverages their productivity and enables faster and better collaboration and communication.”[5] Subsequent research has reported a positive relationship between shadow IT usage and individual performance.[6] Business units rarely bypass IT processes out of malice or disregard for security. They bypass them because the approval process takes longer than the business deadline, the approved alternative lacks critical functionality, previous requests were denied without clear explanation, they weren’t aware an approved solution existed, or the “approved” tool requires weeks of access provisioning.

Each of these represents a governance process failure, not necessarily just a user compliance failure. Governance design that addresses root causes rather than layering additional restrictions on top of broken processes stands a better chance of actually working.

The velocity problem is particularly acute with AI tools, where a business unit can identify a need, find a solution, and begin using it within hours while traditional IT approval cycles measured in weeks or months struggle to keep pace. Shadow adoption becomes inevitable when the gap between need identification and solution delivery exceeds the time required to procure an unauthorized alternative.

This reality suggests governance design principles specific to AI adoption.

Pre-approved Tool Catalogs: Maintain a vetted list of AI tools for common use cases with instant access provisioning. Marketing needs copywriting assistance? Here are three approved options with documented capabilities, pricing, and access instructions. Legal needs contract review? Pre-vetted alternatives ready for immediate use.

Risk-Based Approval Tiers: Low-risk tools (no sensitive data, limited integration, reputable vendor) should have accelerated approval paths measured in days, not weeks. High-risk tools (sensitive data processing, extensive integrations, data residency concerns) warrant longer review cycles. The mistake, a self-designed trap really, is treating all AI tools as uniformly high-risk.

Transparent Decision Communication: When AI tool requests are denied, document why and provide approved alternatives. “No, you can’t use Tool X because of data residency concerns, but Tool Y provides similar functionality and meets our requirements. Here’s how to access it.” Users who understand reasoning are more likely to comply.
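The catalog and tiering principles can be combined into a single intake step: requests for a catalogued use case are provisioned immediately, and everything else is routed to a review tier. The catalog entries, the integration threshold, and the SLA values below are hypothetical placeholders to tune against your own commitments; the low-risk test simply encodes the criteria listed above, and anything that fails it falls into the longer review.

```python
from dataclasses import dataclass

# Hypothetical pre-approved catalog: use case -> vetted tools with instant provisioning.
CATALOG = {
    "copywriting": ["Approved Tool A", "Approved Tool B", "Approved Tool C"],
    "contract review": ["Approved Tool D"],
}

# Hypothetical review SLAs in business days.
SLA_DAYS = {"low": 3, "high": 30}
MAX_LOW_RISK_INTEGRATIONS = 1  # hypothetical reading of "limited integration"


@dataclass
class ToolRequest:
    use_case: str
    handles_sensitive_data: bool
    integrations: int
    data_residency_concern: bool
    vendor_reputable: bool


def route(request):
    """Return (decision, detail): catalogued tools to provision, or a review tier and SLA."""
    if request.use_case in CATALOG:
        return "provision", CATALOG[request.use_case]
    low_risk = (not request.handles_sensitive_data
                and request.integrations <= MAX_LOW_RISK_INTEGRATIONS
                and request.vendor_reputable
                and not request.data_residency_concern)
    tier = "low" if low_risk else "high"
    return f"{tier}-risk review", SLA_DAYS[tier]


if __name__ == "__main__":
    print(route(ToolRequest("copywriting", False, 0, False, True)))
    print(route(ToolRequest("financial forecasting", True, 3, True, True)))
```

Keeping the catalog check first is the point of the design: the fastest path is the approved one, and review effort is reserved for requests the catalog can’t answer.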

Fundamental Recognition

Shadow AI, like shadow IT before it, emerges from a gap between business need and approved solution availability. Governance frameworks provide structure for addressing this challenge, but successful implementation requires acknowledging an uncomfortable truth: nearly every shadow purchase represents someone trying to solve a problem faster than IT can deliver solutions.

The organizations seeing the least shadow AI aren’t those with the most restrictive policies. They’re the ones with the fastest approved alternative delivery. When the path of least resistance leads through approved channels rather than around them, compliance follows naturally.

Risk management isn’t about eliminating shadow technology adoption; it’s about making approved paths easier than unapproved ones while maintaining visibility into the inevitable exceptions. Execution requires recognizing that governance serves the business, not the other way around.

Shadow IT containment strategies may prove more central to enterprise AI governance than formal AI adoption roadmaps. The technology arriving through the back door often reveals business needs that front-door processes failed to address. Understanding that pattern and designing governance that responds to it becomes the foundation for sustainable AI governance in an environment where capability evolution outpaces traditional approval cycles.

Governance that ignores the speed of business need will be circumvented, and that’s just the harsh reality to which we need to adapt. Your choice is whether to acknowledge that reality and respond accordingly or continue discovering shadow AI through incident response rather than proactive management.

CREDITS: Claude Sonnet 4.5 editorial review; Sora for banner image generation.

References

[1] ISACA. (2025, June 25). AI use is outpacing policy and governance, ISACA finds. Retrieved from https://www.isaca.org/about-us/newsroom/press-releases/2025/ai-use-is-outpacing-policy-and-governance-isaca-finds

[2] Salesforce. (2023, March 7). Salesforce announces Einstein GPT, the world’s first generative AI for CRM. Retrieved from https://www.salesforce.com/news/press-releases/2023/03/07/einstein-generative-ai/

[3] Adobe. (2024, October 14). Adobe launches Firefly Video Model and enhances image generation. Retrieved from https://news.adobe.com/news/downloads/pdfs/2024/10/101424-adobemax-firefly.pdf

[4] Microsoft. (2025, October 1). Employees can bring Copilot from their personal Microsoft 365 plans to work - what it means for IT. Retrieved from https://techcommunity.microsoft.com/blog/microsoft365copilotblog/employees-can-bring-copilot-from-their-personal-microsoft-365-plans-to-work---wh/4458212

[5] Silic, M., & Back, A. (2014). Shadow IT – A view from behind the curtain. Computers & Security, 45, 274-283. https://doi.org/10.1016/j.cose.2014.06.007

[6] Mallmann, G. L., & Maçada, A. C. G. (2021). The mediating role of social presence in the relationship between shadow IT usage and individual performance: A social presence theory perspective. Behaviour & Information Technology, 40(4), 427-441. https://doi.org/10.1080/0144929X.2019.1702100